On January 16th, 2025, the CFPU wrapped up the 2025 AI Winter School, the second edition of the program. When we first offered the School last year, there were 475 registrants; this year the number quadrupled to almost 2,000. We welcomed registrants from 37 countries, drawn from over 100 colleges and universities, as well as institutions such as Fermilab, CERN, SLAC, and the Brookhaven, Los Alamos, and Lawrence Berkeley National Laboratories. Within the Brown community, we had registrants from the Departments of Physics, Applied Math, DEEPS, Chemistry, and Engineering.

On behalf of the AI School Organizing Committee, we are deeply appreciative of the support we've received from Brown Physics and beyond.

We wish to thank our Physics faculty presenters this year, Ian Dell'Antonio and Loukas Gouskos, as well as CFPU Fellow Shawn Dubey for developing and presenting an additional Introductory module. We were very fortunate to welcome two Brown Physics alumni, James Verbus (LinkedIn) and Michael Luk (Deloitte/SFL Scientific), as presenters this year, along with Yanting Ma (Mitsubishi Electric Research Laboratories) and Alexis Johnson (Deloitte/SFL Scientific). We wish to thank them for their outstanding presentations and for the extensive time and effort they dedicated to preparing their modules.

Many thanks are also due to our esteemed panelists at our opening round-table: Max Tegmark (MIT), Kyle Cranmer (University of Wisconsin-Madison), and Jim Halverson (Northeastern University).

Numerous faculty contributed their time and expertise to the development of the 2025 School and its topics, including Rick Gaitskell, Ian Dell'Antonio, Jennifer Roloff, Matt LeBlanc, Loukas Gouskos, Jonathan Pober, Gaetano Barone, and CFPU Fellows Shawn Dubey and Madhurima Choudhury.

Brown Physics students were instrumental in the development and presentation of the 2025 AI Winter School. Special acknowledgment is due to Woody Hulse, Lazar Novakovic, and Philip LaDuca for developing and co-presenting modules during the 2025 School alongside Physics Department faculty. Chongwen Lu, Chen Ding, Lazar Novakovic, and Philip LaDuca all provided critical support this year.

Hearty thanks are also due to Valerie DeLaCámara for helping to boost registration to new heights, to Michael Antonelli for his technical support, and to the entire team at Brown Media Services for their invaluable assistance during this virtual event.

All modules will be available soon on the Brown Physics Department's YouTube channel and on the AI School's Indico site.

Warm regards,

The Organizing Committee

Richard Gaitskell (Director), Ian Dell’Antonio (Associate Director), Jennifer Roloff, Jonathan Pober, Matt LeBlanc, Loukas Gouskos, Gaetano Barone, Shawn Dubey, Madhurima Choudhury, Ka Wa Ho

2025 AI Winter School presenters and modules:

Presenter: Shawn Dubey

Title: Introductory Module

Description: This module is the introduction to the virtual AI Winter School. It consists of a short introductory lecture on AI/ML followed by a brief hands-on session introducing participants to the basics of using Google Colab, the required platform for this winter school. The module is particularly geared toward those with no knowledge of AI/ML and is a recommended prerequisite to the other modules for those coming in with no background in machine learning.

________________________________________________________________________

Presenter: Yanting Ma (MERL, Mitsubishi Electric Research Laboratories)

Title: Physics-Inspired Operator Learning for Inverse Scattering with Application to Ground Penetrating Radar

Description: Ground Penetrating Radar (GPR) provides a non-destructive solution for underground utility mapping. The data acquisition process involves emitting a known electromagnetic wave into the subsurface and recording the scattered wave above the ground. The goal of inverse scattering is to estimate the spatial distribution of the electric permittivity of the subsurface based on the received scattered wave.

The Fourier Neural Operator (FNO) has been used to model time-domain wave propagation. One main challenge of such learned simulators is the accumulation of error during temporal unrolling. In this module, we introduce our modification to the FNO architecture that is inspired by the iterative Born approximation to the frequency-domain integral equation for scattering. We demonstrate the effectiveness of the learned forward operator by applying it to the inverse scattering problem in a GPR setting.

________________________________________________________________________

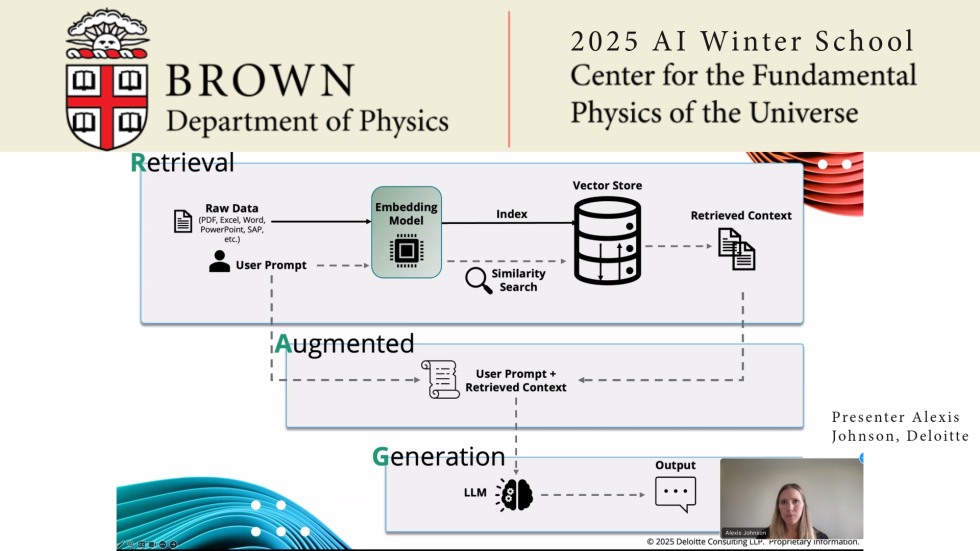

Presenters: Michael Luk and Alexis Johnson (Deloitte, SFL Scientific)

Title: Generative AI with physics and industry applications

Description: This module introduces fundamental concepts of Artificial Intelligence, provides an overview of Generative AI and its applications, offers hands-on experience with AI and Gen AI models and tools, and highlights industrial and physics-related applications.

________________________________________________________________________

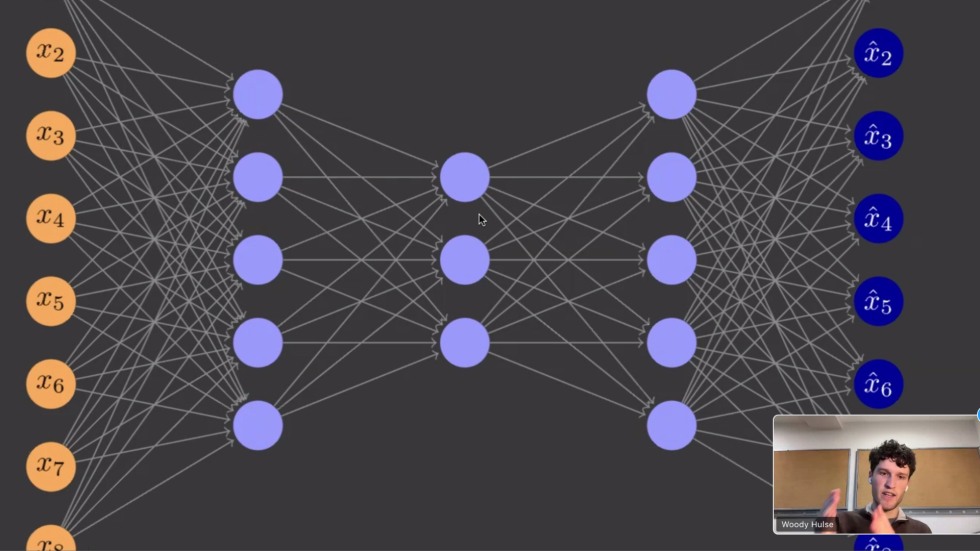

Presenters: Shawn Dubey and Woody Hulse

Title: Machine Learning for Data Compression in Dark Matter Direct Detection Experiments

Description: The LUX-ZEPLIN (LZ) dark matter direct detection experiment searches directly for dark matter particle interactions in a 10-tonne liquid xenon target. LZ collects about 50 MBytes per second of detector data across its 494 photomultiplier tubes (PMTs), or around 1.5 PBytes (1.5 million GB) per year. The utility of this data is naturally constrained by its scale: to analyze this quantity of information efficiently, we need compressed, rich representations of the data. Artificial neural networks (ANNs) have long been applied as an effective way to classify, model, and reason over large quantities of data due to their ability to learn over vast hyperspaces. Autoencoders (AEs), deep neural networks composed of encoder and decoder components, are one method commonly used for compressing data. In this module, participants learn about the formulation, benefits, and limitations of AEs and apply them to LZ data compression, an approach that can also be useful in other areas of research.
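As a quick sanity check on the data volume quoted above, the yearly figure follows directly from the per-second rate. The short Python sketch below reproduces the arithmetic; it assumes continuous running over a 365-day year and decimal units (1 PByte = 10^15 bytes), neither of which is stated in the module description, and the function name is purely illustrative.

```python
# Back-of-the-envelope check of the data volume quoted in the description.
# Assumptions (not stated in the text): continuous running for a 365-day
# year, and decimal units (1 PB = 1e15 bytes, 1 GB = 1e9 bytes).

RATE_MB_PER_S = 50                      # detector data rate quoted in the text
SECONDS_PER_YEAR = 365 * 24 * 3600      # 31,536,000 seconds


def yearly_volume_bytes(rate_mb_per_s: float) -> float:
    """Return the number of bytes accumulated over one year at a fixed rate."""
    return rate_mb_per_s * 1e6 * SECONDS_PER_YEAR


volume = yearly_volume_bytes(RATE_MB_PER_S)
print(f"{volume / 1e15:.2f} PB per year")   # 1.58 PB per year
print(f"{volume / 1e9:.2e} GB per year")    # 1.58e+06 GB per year
```

Under these assumptions the result is about 1.6 PBytes per year, consistent with the "around 1.5 PBytes" figure given above once detector downtime is allowed for.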

________________________________________________________________________

Presenters: Ian Dell’Antonio and Philip LaDuca

Title: Using unsupervised learning to find interacting and starburst galaxies

Description: Galaxy interactions are laboratories for dark matter physics, star formation, and galaxy evolution. Interacting and starburst galaxies can be detected in deep imaging surveys but represent a small fraction of the tens of millions of galaxies observed. Furthermore, interactions occur in many different scenarios and have different signatures, such as tidal tails, resonances or rings, and disrupted disks, which complicate the training of supervised learning networks. A promising technique for selecting candidate interacting galaxies involves constructing feature-distance maps to organize the images of galaxies and then finding groupings in feature space. In this module, this unsupervised technique is demonstrated both standalone and in conjunction with a supervised network, as a pre-filter for potentially interesting features, using galaxy images taken with the Dark Energy Camera in Chile. Participants come away with an understanding of the broad applicability of the technique beyond optical astronomy to general anomaly detection in image collections.

________________________________________________________________________

Presenters: Loukas Gouskos and Lazar Novakovic

Title: Development and Deployment of Graph Neural Networks in Particle Physics

Description: In recent years, Graph Neural Networks (GNNs) have emerged as a transformative tool in particle physics, offering a powerful framework for analyzing complex, non-Euclidean data structures such as particle interactions and detector outputs. This session provides an exploration of GNNs, bridging their theoretical foundations with practical applications in particle physics. We highlight their relevance to cutting-edge research at CERN (e.g., ATLAS and CMS), where GNNs are revolutionizing tasks such as particle identification, event classification, and detector signal reconstruction. The hands-on section features two critical tasks that showcase the importance of GNNs: a supervised task (particle identification) and a weakly supervised task (particle shower reconstruction). We work with Python-based frameworks and tools (e.g., PyTorch) that are widely used for GNN development and deployment.

________________________________________________________________________

Presenter: James Verbus (LinkedIn)

Title: Overview of Large Language Models (LLMs), RAG, and strategies for input moderation using Llama Guard

Description: This module provides an exploration of LLM tools and techniques, including the OpenAI API, an open Llama model, retrieval-augmented generation (RAG) for improving AI system performance with external data, and strategies for safeguarding LLM implementations with Llama Guard. We set up an LLM using both OpenAI’s API and a locally installed Llama model, build a basic RAG system to demonstrate how external data can be leveraged to enhance AI outputs, and show how Llama Guard can moderate input prompts to ensure safe and appropriate responses in practical applications. Participants leave with familiarity with these LLM tech stack components and the ability to use them.

Watch this space and our social media for news of the 2026 AI Winter School!